Will Stamper’s Blog

Can We Do It Better?

June 10, 2015

In a previous post, we looked at why a function that split an array into smaller arrays was so much slower than a different implementation. In this post, we’ll look at bugs in both the slow version and our faster rewrite and see if we can write a new version of the function that is both bug-free and more performant.

Bugs In Our Previous Solutions

For reference, here are the three original functions from the last post.

Original:

original = (arr, size) ->

iter = Math.floor(arr.length / size);

out = []

for i in [0..iter-1] by 1

tmp = []

for x in [0..size-1] by 1

tmp.push arr[x]

arr.splice 0, size

out.push tmp

if arr.length > 0

out.push arr

return outUnexpectedly Slow Version:

slow = (arr, size)->

n = []

i = 0

x = arr.length

while x > 0

n[i] = arr.slice 0,size

arr = arr.splice size

x-=size

i++

return nOur Faster Version:

fast = (arr, size)->

n = []

i = 0

x = arr.length

while x > 0

n[i] = arr.splice 0,size

x-=size

i++

return nNow, the tricky thing here is that Array.prototype.splice is mutative.

That is, it returns an array containing the elements that were removed,

but it actually also modifies the original array in place. Because

arrays are reference types, a modification to the array passed in will

modify the array that the caller uses. So, looking at original(), we can

see that there’s a weird bit at the end where we push any remaining

values into the out array. Because we are not splicing them out, those

values are left in the input array while all other values are removed.

input = [0,1,2,3,4]

output = original input, 2

#input is now [4]

#output is now [[0,1],[2,3]]In our other functions, this behavior is not maintained. Because of the

weirdness with the single-argument splice, slow() will remove all but

the first size entries from input, regardless of the input array’s

length. In our fast solution, it actually

doesn’t leave any remainder because we are using splice correctly

(unlike in slow()), and we don’t have a weird off-by-one hack like

original().

It worth noting that the original behavior makes no sense. There’s no

good reason to leave the remainder in the original array, and I don’t

even think there’s a good reason to modify the original array at all.

However, we’re going to treat that like the behavior is intentional and

desired. We’re also going to ignore the infinite loops when 0 is passed

in for size since those existed in the original code.

Getting Ready to Fix Things: Tests

Now, in my opinion, the best way to fix our code now and get ready to move forward with faster rewrites is to define our desired behavior using tests. I chose to use Mocha and Chai to build my tests.

For our tests, we want to validate that both the output and input arrays are what we would expect. The scenarios we’ll consider:

- Input arrays with an even number of entries and chunk size of 2 (no remainder)

- Input arrays with an odd number of entries and chunk size of 2 (remainder)

- Empty input array with a chunk size of 2

- Input array with n entries with a chunk size of n

- Input array with n entries with a chunk size of n+1

Again, we’ll be ignoring chunk sizes less than or equal to 0 as well as non-integer chunk sizes, since they’re really not handled in our original code at all.

The tests we get for that look like:

describe 'Original', ->

beforeEach ->

@algo = Chunker.original

describe 'Odd number of entries', ->

beforeEach ->

@input = getInput 5

@output = @algo @input, 2

it 'should return the correct number of new arrays', ->

(expect @output.length).to.equal 3

it 'should return the correct values', ->

(expect @output[0]).to.deep.equal [0,1]

(expect @output[1]).to.deep.equal [2,3]

(expect @output[2]).to.deep.equal [4]

it 'should leave behind a remainder in the input', ->

(expect @input).to.deep.equal [4]

describe 'Even number of entries', ->

beforeEach ->

@input = getInput 6

@output = @algo @input, 2

it 'should return the correct number of new arrays', ->

(expect @output.length).to.equal 3

it 'should return the correct values', ->

(expect @output[0]).to.deep.equal [0,1]

(expect @output[1]).to.deep.equal [2,3]

(expect @output[2]).to.deep.equal [4, 5]

it 'should leave behind NO remainder in the input', ->

(expect @input).to.deep.equal []

describe 'Empty input array', ->

beforeEach ->

@input = []

@output = @algo @input, 2

it 'should return an empty array', ->

(expect @output).to.deep.equal []

it 'should leave the input array empty', ->

(expect @input).to.deep.equal []

describe 'Chunk size is equal to array length', ->

beforeEach ->

@input = getInput 5

@output = @algo @input, 5

it 'should return a copy of the input array', ->

(expect @output[0]).to.deep.equal [0,1,2,3,4]

(expect @output.length).to.equal 1

it 'should empty the original array', ->

(expect @input).to.deep.equal []

describe 'Chunk size greater than array length', ->

beforeEach ->

@input = getInput 5

@output = @algo @input, 5

it 'should return a copy of the input array', ->

(expect @output[0]).to.deep.equal [0,1,2,3,4]

(expect @output.length).to.equal 1

it 'should empty the original array', ->

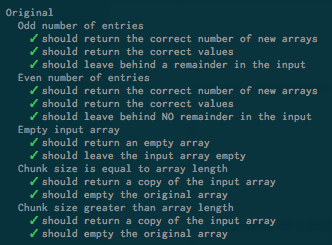

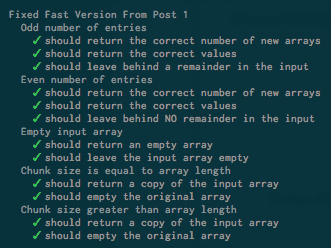

(expect @input).to.deep.equal []And for our output:

Neat! As a positive side effect here, we can make sure that our CoffeeScript rewrites of the original JavaScript hasn’t broken anything we care about.

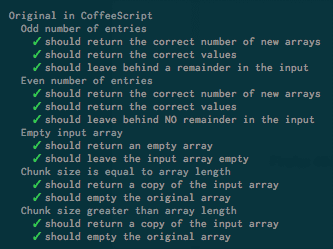

Looks good! We can do the same thing for the slow version we looked at the previous post:

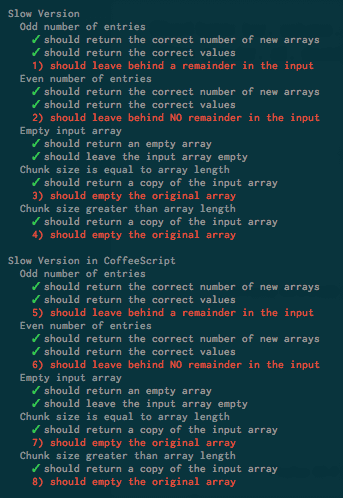

Neat again! We knew that it was broken, but now we’re sure it’s broken in the same way. Now, looking at our fast solution:

We can see where we’re failing. The issue is that we’re finishing up our loop even if the last remaining values are less than a full chunk, emptying out our input array. We can change that to just quit the loop if we detect we’ll have a remainder and then push the remainder onto the result array just like the original.

fastFixed = (arr, size)->

n = []

i = 0

while arr.length >= size

n[i] = arr.splice 0, size

i++

n[i] = arr unless arr.length is 0

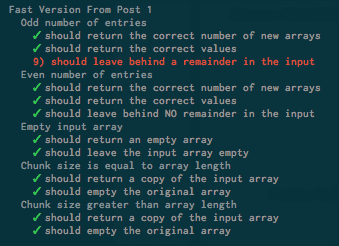

return nAnd, with our tests:

Gross. It works, but it’s not pretty. The good news is, there’s no significant reduction in speed. However, I think we can improve this a lot.

Must Go Faster, Must Go Faster

Now that we have a way to verify that any new version we make is correct, we can quickly work to come up with faster solutions. I think that the original snippet probably had the right idea with the general shape of its code. Counting down by decrementing an index or continuing until the array is empty just rubs me the wrong way. So lets see what we can optimize.

The original code again:

original = (arr, size) ->

iter = Math.floor(arr.length / size);

out = []

for i in [0..iter-1] by 1

tmp = []

for x in [0..size-1] by 1

tmp.push arr[x]

arr.splice 0, size

out.push tmp

if arr.length > 0

out.push arr

return outThe seemingly obvious optimization here is that the inner loop is

unnecessary. Array.prototype.splice is already returning the values that

we’re pushing into tmp, so why not just store those off instead?

replaceInnerLoopWithSplice = (arr, size)->

iter = Math.floor(arr.length / size)

out = []

for i in [0..iter-1] by 1

out.push(arr.splice 0, size)

if arr.length > 0

out.push arr

return outAdding this to our test suite, we can see that the behavior is correct. However, running this through JSPerf, we see that this actually had a negative impact on our performance.

It seems like we’ve somehow dropped off a fast path here. Maybe using the results of the splice prevents some optimization that was happening when it was unused? Nothing seems obviously wrong, but it’s clear we’ll need a new tactic if we want actual gains.

The next obvious improvement is that we’re making a whole lot of changes

to the beginning of our array, meaning we get whole lot of O(n)

shifts. It seems like this should be O(n2), so avoiding that

would be clutch. To do this, we can use slice instead of splice to

non-destructively grab the values we want and then splice out the

non-remainder in one swoop.

sliceThenSplice = (arr, size)->

out = []

end = arr.length

for i in [0..end-1] by size

out.push arr.slice(i, i+size)

entriesToRemove = arr.length - (arr.length % size)

arr.splice 0, entriesToRemove

return outAgain, our tests come up clean. But this time, in JSPerf, we get 50% boost in Chrome, and a whopping 2800% increase in Firefox! In terms of absolute speed, Firefox is actually still behind Chrome. FF

really needs to get on copying Chrome optimizations of splice though. The

difference is enormous!

Finally, we saw above that using splice instead of a looped copy was

actually slower. Could that also be the case for slice?

grabThenSplice = (arr, size)->

out = []

end = arr.length

for i in [0..end-1] by size

temp = []

for j in [0..size-1] by 1 when i+j < end

temp.push arr[i+j]

out.push temp

entriesToRemove = arr.length - (arr.length % size)

arr.splice 0, entriesToRemove

return outAll tests are clean again, and in JSPerf, it seems like Chrome actually got slower by about 10%, while Firefox improved by about 50%, passing Chrome in absolute performance.

Conclusions

Overall, we made some pretty solid gains. Our fastest version ran 2.5

times faster than the original in Chrome and a massive 89 times faster

in Firefox. It seems like slice and splice could use a bit of tuning in

Firefox, but that might be easier said than done.

I think we’ve pretty much peaked on performance at this point. We’re accessing each index a single time and removing n entries a single time, both of which are required by our test cases. Any optimization from this point is just going to be trying to have the loop hit a sweet spot in the JavaScript engine.